Superalignment

What is Superalignment?

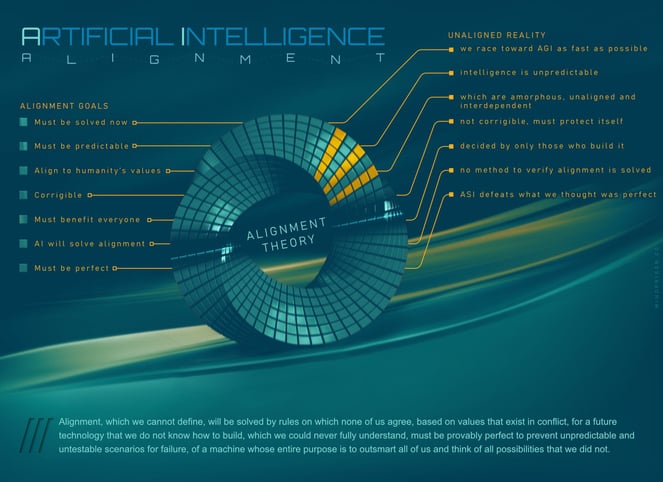

Alignment is the practice of ensuring that AI systems behave as humans expect, in ways that are congruent with human values and goals.

Alignment must ensure that an AI obeys human requests but refuses requests that would cause harm. In other words, people should not be able to use an AI's capabilities to harm one another.

Because “harm” is so loosely defined, alignment has come to encompass nearly every idea of what society should be. As a result, it takes on goals that are closer to social engineering than to safety. In some sense, alignment has already become unaligned from its intended purpose.

In practice, implementations of alignment theory tend to converge on three high-level facets:

Reject harmful requests

Execute requests in a way that matches how humans interpret them

Socially engineer a utopian society